All of us exhibit cognitive biases. A cognitive bias, often called human bias, is a systematic pattern of thinking that affects our judgments and decisions. A common example that influences most of us is blaming external factors when something goes wrong. These human biases can be projected onto AI technology even when intentions are good, so they become part of the technology we build in several ways.

Artificial intelligence is used extensively in sensitive domains such as healthcare, criminal justice, and human resources. This raises two questions for society: to what extent are human biases absorbed into AI systems, and how can they be reduced? Human biases can slip into AI systems in many different ways.

AI algorithms are designed to make decisions on the basis of the data fed into them. These data sets can incorporate biased human decisions or historical data that reflects social inequities. Amazon faced this issue with an experimental recruitment tool that used machine learning, a subset of AI, to score job applicants. The tool screened applicants by noticing patterns in the resumes submitted over a span of 10 years. Amazon dropped the tool after it showed bias against female candidates.

These biases ultimately stem from human influence. They reduce the potential of AI systems and lead to inaccurate results. Mitigating AI bias therefore starts with improving the human decision-making process. AI algorithms and datasets can also be inspected for bias to improve outcomes. Researchers are developing technical ways to define fairness in order to make AI systems more accountable and responsible.
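One way to make a fairness definition concrete is demographic parity, which compares how often each group receives a positive decision. The sketch below is only an illustration of that single definition, not the method used by any of the tools mentioned in this article. The function names, the group labels "A" and "B", and the toy decisions are all assumptions made up for the example.

```python
from collections import defaultdict


def selection_rates(groups, decisions):
    """Return the fraction of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(groups, decisions):
    """Gap between the highest and lowest group selection rates.

    0.0 means every group is selected at the same rate; larger values
    mean a larger disparity under this particular fairness definition.
    """
    rates = selection_rates(groups, decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: group membership and the model's decision
# (1 = selected, 0 = rejected) for eight applicants.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
decisions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, decisions)
gap = demographic_parity_difference(groups, decisions)
print(rates, gap)
```

A gap close to zero suggests the groups are selected at similar rates. Demographic parity is only one of several fairness definitions, and which one is appropriate depends on the application.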

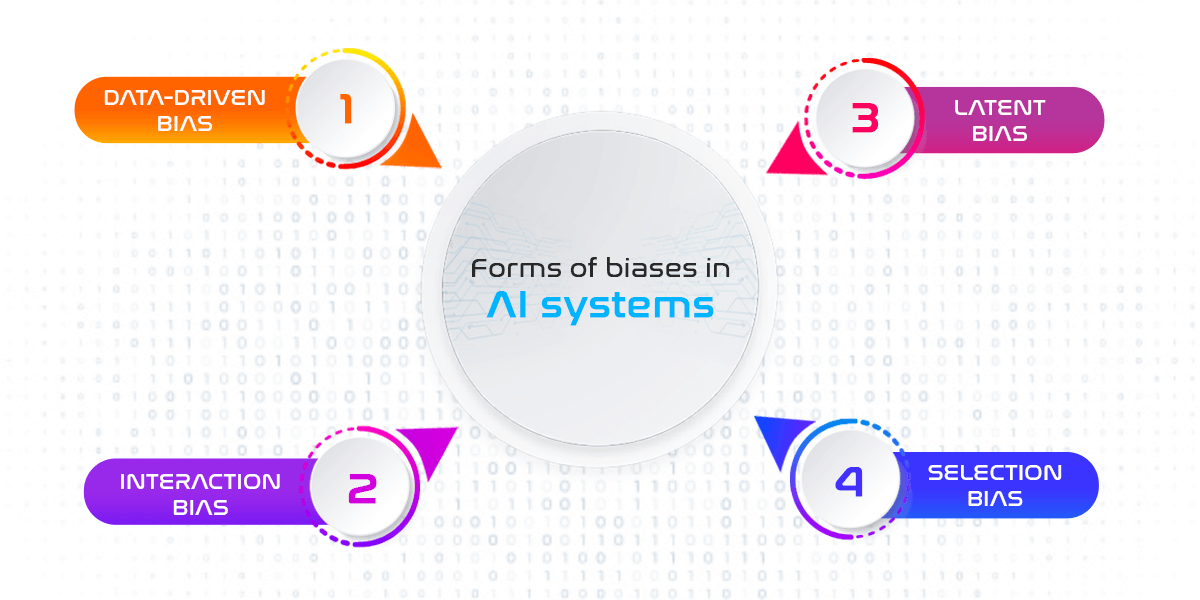

Forms of bias that can be seen in AI systems

Data-driven bias: AI systems produce output by observing patterns in the data they receive. If the data itself is biased, the output will be biased to the same extent.

Interaction bias: Some AI systems learn through user interaction. Interaction biases result from the biases of the people interacting with the AI systems or algorithms. A good example is Microsoft’s Tay, a Twitter-based chatbot designed to learn from its interactions with users. Microsoft scrapped it within 24 hours after it began producing racist output under the influence of a user community.

Latent bias: An AI algorithm may produce skewed results because it picks up correlations from historical data that reflects existing social inequities. This is known as latent bias.

Selection bias: This bias occurs when the dataset available contains more information about one subgroup than another. In this situation, the system will give more preference to the dominant group.
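A simple first check for selection bias is to measure how much of the dataset each subgroup accounts for. The following is a minimal sketch under stated assumptions: the function names, the group labels, and the 20% threshold are hypothetical choices for illustration, and a real audit would pick a threshold suited to the population being modeled.

```python
from collections import Counter


def group_shares(labels):
    """Return each subgroup's share of the dataset as a fraction of 1."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(labels, min_share=0.2):
    """List subgroups whose share falls below min_share.

    min_share is an arbitrary illustrative threshold, not a standard.
    """
    shares = group_shares(labels)
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical dataset: nine records from one subgroup, one from another.
sample = ["group_a"] * 9 + ["group_b"] * 1

shares = group_shares(sample)
flagged = underrepresented(sample)
print(shares, flagged)
```

Flagged subgroups are candidates for collecting more data or reweighting before training. A balanced count alone does not guarantee fair outcomes.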

What are companies doing to reduce AI bias?

Large tech companies including Google, IBM, Facebook, and Microsoft are deploying AI technology across their operations. Amid growing concerns about AI ethics, organizations have turned their focus to the impact of human bias on AI systems and to minimizing it. More transparency in operations is needed to keep AI systems as free of bias as possible. The following are some of the fairness programs that organizations have launched to reduce bias in AI technology:

- Google has designed the ‘What-If Tool’ to inspect AI models and detect biases. This tool can help you visualize your datasets and maintain AI fairness.

- IBM’s AI Fairness 360 toolkit helps you analyze, report, and reduce any type of discrimination and bias in machine learning models and datasets.

- Many academic fairness-in-AI projects are in progress, funded by the National Science Foundation in collaboration with Amazon.

- Facebook AI is using a new ‘radioactive data’ technique to detect whether a dataset was used to train a machine learning model. It helps keep track of the images used in a dataset and improves output.

AI systems make decisions that affect people, so companies must train AI to be fair in every matter it handles. As the saying goes, with great power comes great responsibility. As AI extends its roots deeper into society, the need for fairer and more responsible AI will grow rapidly.